Towards a Universal Decision Making Paradigm

My undergraduate research tackled how we can learn new behaviors from data: what are scalable ways to learn sequential decision making?

I gave a talk summarizing this research (~= my thesis), for which you can find the slides here.

Unsupervised reinforcement learning

My early work (AOP, LiSP) targeted the challenging task of adapting to new environments without failing catastrophically, given only a single life. This requires confidence in the agent's skills before execution, since poor execution could lead to disastrous situations that are difficult or impossible to escape. I found that purely online RL algorithms struggled here: they were unstable and, in some sense, had to experience a failure before they could learn to avoid it.

At the time, I became convinced of the power of offline, data-driven RL: learning broad world knowledge before setting foot in the new environment, rather than starting from scratch. I studied unsupervised RL methods built on learned dynamics models, aiming to imbue the agent with a variety of skills without real-world interaction. A couple of works I was a supplementary author on (URLB, L-GCE) also studied unsupervised learning objectives in the context of online RL.

Sequence modeling

My later work (Decision Transformer, Pretrained Transformers as Universal Computation Engines, Text-DT) studied how we could adapt the emerging paradigm of large language models to our settings. While RL typically used small, shallow networks, language modeling research found that performance improved reliably as models and datasets grew. Our key result was that this sequence modeling objective is a powerful way to teach models multimodal decision-making capabilities from offline datasets.
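To make the sequence modeling objective a bit more concrete, here is a minimal sketch of how a trajectory might be turned into a token sequence for return-conditioned modeling. It loosely follows the Decision Transformer setup, assuming undiscounted returns-to-go and one (return-to-go, state, action) triple per timestep. The function names and the `("return", ...)` style token tags are hypothetical, and the embedding layers and the transformer itself are omitted.

```python
from typing import Any, List, Sequence, Tuple

Token = Tuple[str, Any]  # hypothetical (token type, value) pair


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """Undiscounted suffix sums: R_t = r_t + r_{t+1} + ... + r_T."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each entry accumulates all future rewards.
    for t in range(len(rewards) - 1, -1, -1):
        running += rewards[t]
        rtg[t] = running
    return rtg


def interleave_trajectory(
    states: Sequence[Any], actions: Sequence[Any], rewards: Sequence[float]
) -> List[Token]:
    """Flatten a trajectory into time-ordered (return, state, action) tokens."""
    if not (len(states) == len(actions) == len(rewards)):
        raise ValueError("states, actions and rewards must have equal length")
    tokens: List[Token] = []
    for ret, s, a in zip(returns_to_go(rewards), states, actions):
        # A causal model reads these left to right, so the action at step t
        # is predicted from the desired return and state at t plus all history.
        tokens.extend([("return", ret), ("state", s), ("action", a)])
    return tokens


def next_target_return(target: float, observed_reward: float) -> float:
    """At evaluation time, decrement the desired return by the reward just received."""
    return target - observed_reward


if __name__ == "__main__":
    print(returns_to_go([1.0, 0.0, 2.0]))  # [3.0, 2.0, 2.0]
    print(interleave_trajectory(["s0", "s1"], ["a0", "a1"], [1.0, 2.0]))
```

At evaluation time, one would prompt the model with a desired target return, update it with `next_target_return` after each step, and let the model predict the next action from the tokens so far. Conditioning on the return is what lets a single model trained on mixed-quality offline data be steered toward high-return behavior.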

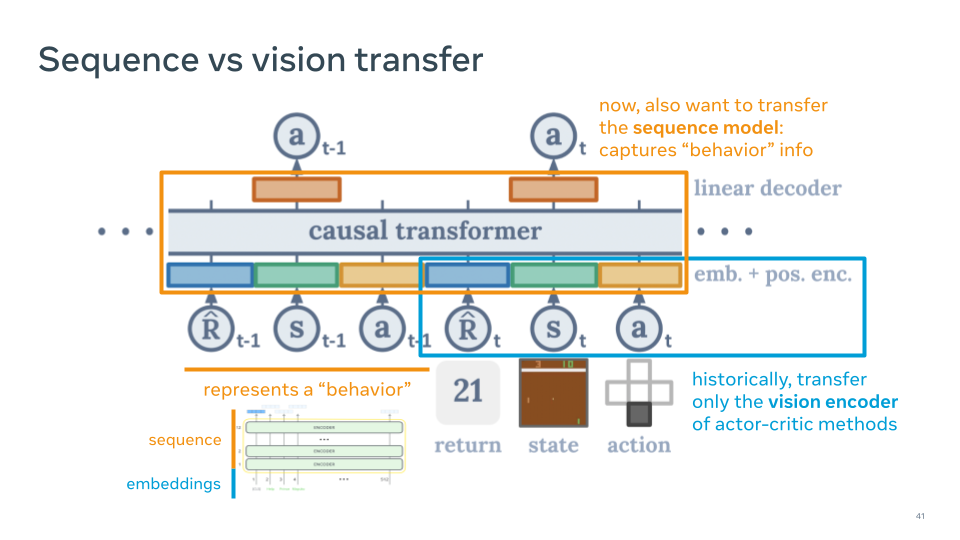

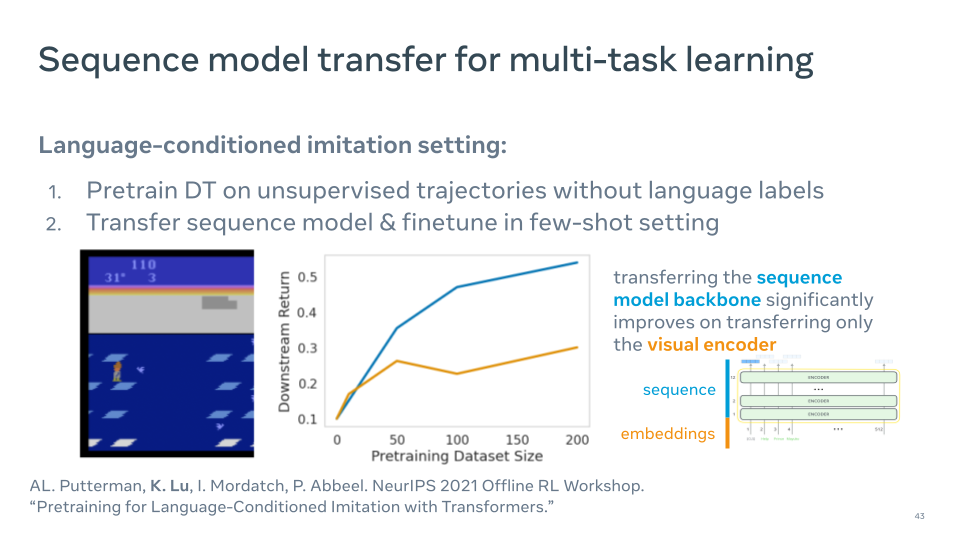

Decision Transformer focused on conditional sequence modeling – conditioning on both the reward and prior actions – which let the model learn compositional information from large datasets. Crucially, rather than transferring only the knowledge of the vision encoder as in prior work, I thought the real power was in transferring the sequential behavioral knowledge of the transformer:

And training on multimodal text labels enables better asymptotic scaling laws:

These continue to be active interests of mine – if you’d like to chat, feel free to send me an email!
