I am currently a researcher at Thinking Machines.

I was previously a researcher at OpenAI, where I worked on reinforcement learning and synthetic data. I graduated from UC Berkeley, where I worked on reinforcement learning and offline sequence modeling. I was fortunate to be advised by Pieter Abbeel and Igor Mordatch.

Email: matches my arXiv papers

Blog

-

Jul 2025 — The Only Important Technology Is The Internet

How can we continue to scale large language models?

-

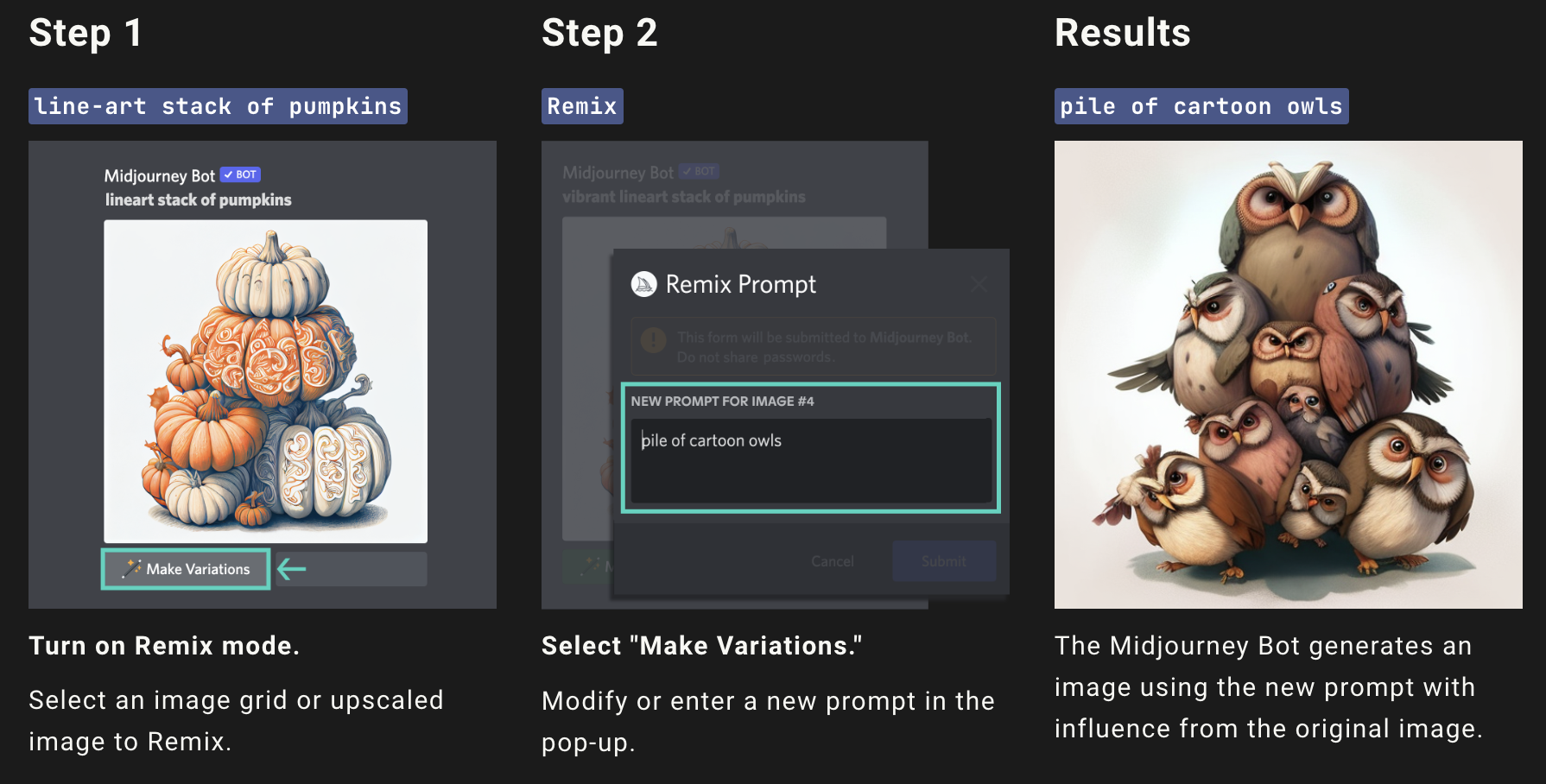

Jun 2025 — AI Models for Pokemon Games

What can Pokemon teach us about designing interactive agents?

-

Mar 2024 — Spending Inference Time

How should we structure inference compute to maximize performance?

-

Feb 2024 — LoRAs as Composable Programs

How can we design LLMs to be future-proof operating systems?

-

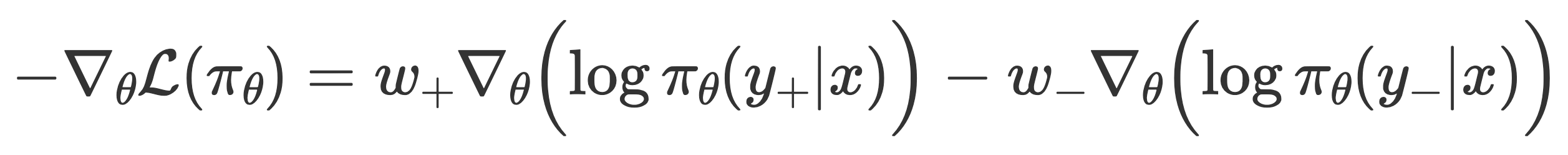

Jan 2024 — Unifying RLHF Objectives

What are different RL algorithms actually optimizing?

Papers

-

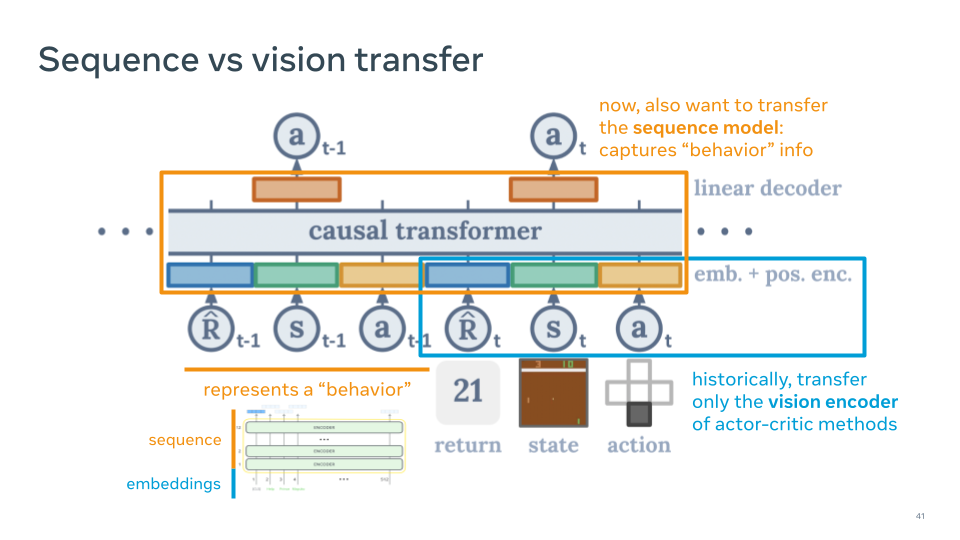

Jun 2021 — Decision Transformer

How can we perform reinforcement learning with autoregressive sequence models?

-

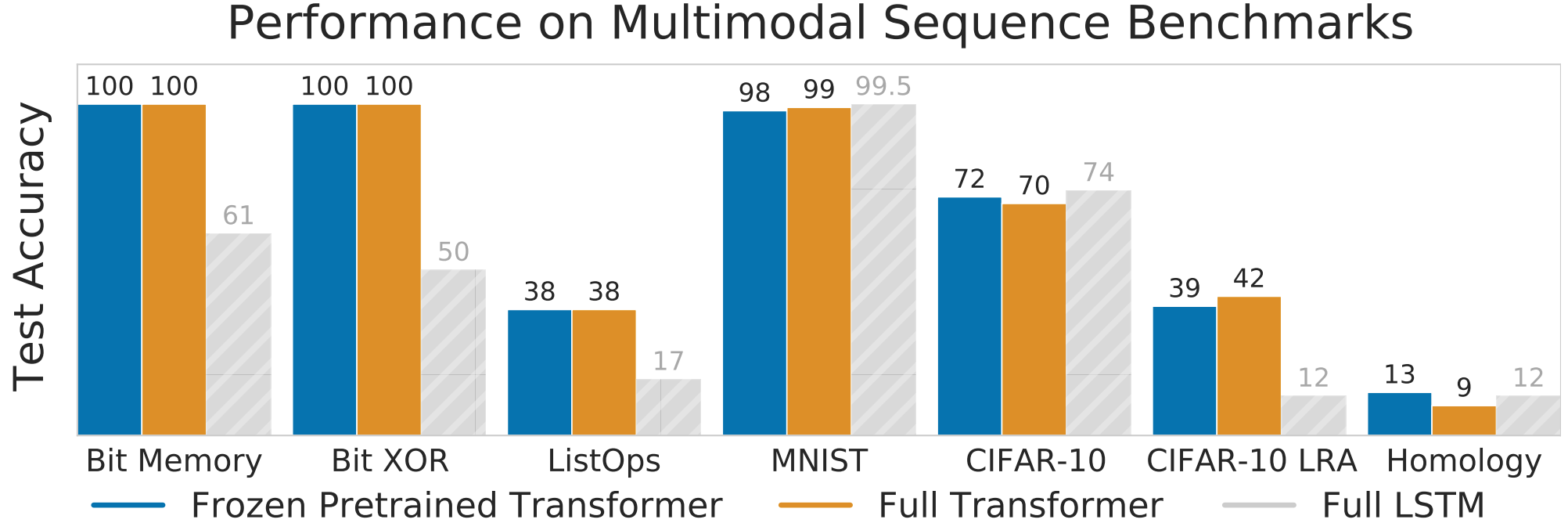

Mar 2021 — Pretrained Transformers as Universal Computation Engines

What are the limits of transfer of large pretrained language models?

-

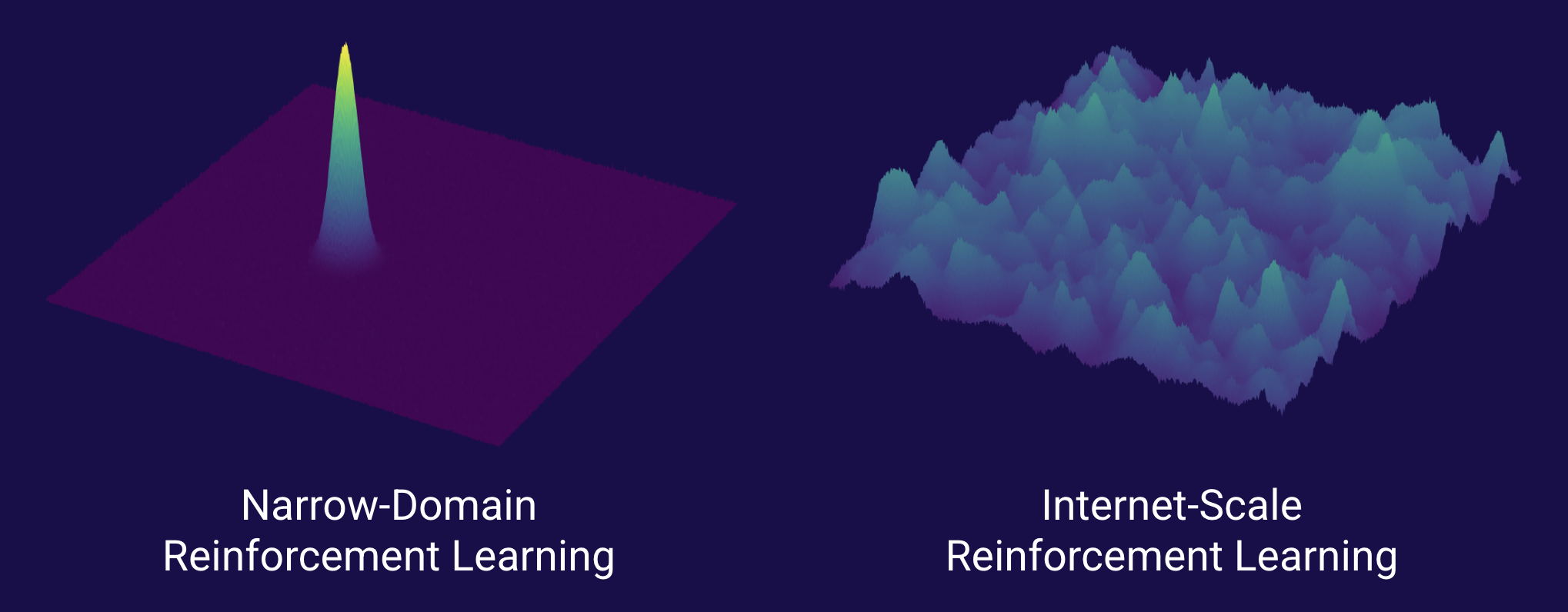

Summary — Towards a Universal Decision Making Paradigm

How can we design a universal learning method for sequential decision making?